The Power of Deep Learning for Computer Vision: From Pixels to Understanding

Computer vision, the field of artificial intelligence that enables computers to interpret and understand visual data, has witnessed a remarkable transformation over the past few years with the advent of deep learning.

Deep learning has revolutionized computer vision, delivering large gains in accuracy and performance across a wide range of applications. In this article, we will explore the impact of deep learning on key computer vision tasks, including object detection, image segmentation, and pose estimation. We will also look at transfer learning, which lets models pretrained on large datasets be adapted to new tasks using much smaller datasets, and examine some real-world applications of deep learning for computer vision. Finally, we will discuss future directions in the field, including emerging areas of research and potential advancements.

Topic list:

- Revolutionizing Computer Vision with Deep Learning

- Object Detection with Deep Learning

- Image Segmentation with Deep Learning

- Pose Estimation with Deep Learning

- Transfer Learning for Computer Vision Tasks

- Real-World Applications of Deep Learning for Computer Vision

- Future Directions in Deep Learning for Computer Vision

Revolutionizing Computer Vision with Deep Learning

Computer vision tasks like object detection, image segmentation, and pose estimation have become significantly more accurate and efficient since the advent of deep learning. The key to this revolution lies in the ability of deep learning models to automatically learn the relevant features of images through training, without relying on handcrafted features.

Object Detection with Deep Learning

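Many deep learning models for object detection, such as the two-stage Faster R-CNN family, use a convolutional neural network (CNN) to extract features from an image, followed by a region proposal network (RPN) that suggests potential object locations. The CNN and RPN share the same feature maps, and a final detection head classifies each proposed region and refines its bounding box to produce the detection results. Not every detector works this way: single-stage detectors such as YOLO and SSD predict boxes directly, without a separate proposal step. The two-stage approach has proven highly effective and achieved state-of-the-art results on benchmarks such as PASCAL VOC and COCO when it was introduced.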
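
Detection benchmarks judge a predicted box by its intersection over union (IoU) with a ground-truth box. PASCAL VOC, for example, counts a detection as correct when IoU exceeds 0.5, and region proposal networks use the same measure to label training examples. Below is a minimal sketch, assuming boxes are given as (x1, y1, x2, y2) pixel corners. The function name `iou` and the example boxes are our own.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2) with x2 > x1, y2 > y1."""
    # Corners of the overlapping rectangle (if the boxes overlap at all)
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    # Width or height becomes 0 when the boxes do not overlap
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


prediction = (50, 50, 150, 150)
ground_truth = (60, 60, 160, 160)
print(round(iou(prediction, ground_truth), 3))  # → 0.681
```

With a 0.5 threshold, this prediction would count as a correct detection.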

What is a convolutional neural network (CNN)?

Imagine you are trying to identify the objects in a picture. You start by looking at different parts of the picture, and you notice some interesting features like edges, colors, and textures. You then use these features to piece together what the objects might be. For example, you might see a round head, two pointy ears, and a furry tail, and guess that it's a dog.

A CNN works in a similar way. It's like a detective that looks for clues in a picture to identify what's in it. The convolution layer is like a magnifying glass that slides over the picture, examining one small patch at a time. Its filters are like different lenses that help the detective see different features, such as edges, shapes, and colors. The ReLU layer keeps only positive responses (it sets negative values to zero), so only the clues that were actually found get passed on. The pooling layer is like a sieve: it shrinks each feature map while keeping the strongest responses, filtering out unnecessary detail. Finally, the fully connected layer is like the detective's brain, which takes all the clues and decides what's in the picture.
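
To make the magnifying-glass analogy concrete, here is a minimal pure-Python sketch of one convolution → ReLU → pooling stage on a tiny grayscale image. The edge filter is hand-picked for illustration; a real CNN learns its filter values during training. The fully connected layer is omitted, and all function names are our own.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (lists of lists) with stride 1 and no padding.
    Like most deep learning libraries, this computes cross-correlation (no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses; set negative responses to zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Keep the strongest response in each non-overlapping size x size window."""
    return [
        [
            max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


# A 5x5 grayscale "image": dark on the left, bright on the right (a vertical edge)
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked vertical-edge filter (a trained CNN would learn its own values)
edge_kernel = [[-1, 1], [-1, 1]]

features = max_pool(relu(conv2d(image, edge_kernel)))
print(features)  # → [[2, 0], [2, 0]]
```

The strong responses in the left column of `features` mark where the edge was found, at a quarter of the original number of positions.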

By training the CNN on a large dataset of labeled images, the detective learns to recognize different patterns and features in pictures, just as you learn to recognize different objects over time. This allows the CNN to predict accurately what's in a picture, even if it has never seen that particular picture before.

What is a Region Proposal Network (RPN)?

Imagine you are an art curator, and you have a bunch of pictures of different objects, but you don’t know which ones are important to display in your gallery. You need a way to quickly identify the most interesting objects and decide which ones to keep.

The Region Proposal Network (RPN) is like a team of art experts who scan through all the pictures and identify the most important objects. The RPN looks at different parts of the picture and tries to identify regions that might contain objects. It does this by sliding a small window over the feature map produced by the CNN. At each position, it scores a set of reference boxes (called anchors) of different sizes and shapes according to how likely each one is to contain an object. It also predicts small adjustments that make each box fit its object more tightly.
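
In the original Faster R-CNN design, the sliding window runs over the CNN's feature map rather than the raw picture. At each position, the RPN scores several reference boxes called anchors. Below is a minimal sketch of how those anchors might be laid out. The function name, the 2x3 feature map, and the stride of 16 pixels are illustrative assumptions. The 3 sizes x 3 aspect ratios match the configuration described in the Faster R-CNN paper. The scoring network itself is not shown.

```python
import math


def generate_anchors(feature_h, feature_w, stride, sizes, aspect_ratios):
    """Return anchor boxes (x1, y1, x2, y2) in image pixels.

    One anchor per (size, aspect ratio) pair is centred on every feature-map cell.
    `stride` is how many image pixels one feature-map cell covers;
    aspect_ratio is height / width.
    """
    anchors = []
    for row in range(feature_h):
        for col in range(feature_w):
            # Centre of this feature-map cell, mapped back to image coordinates
            cx = (col + 0.5) * stride
            cy = (row + 0.5) * stride
            for size in sizes:
                for ratio in aspect_ratios:
                    # Keep the area at size * size while changing the box shape
                    w = size / math.sqrt(ratio)
                    h = size * math.sqrt(ratio)
                    anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


# Hypothetical setup: a 2x3 feature map from a backbone with stride 16
anchors = generate_anchors(2, 3, stride=16, sizes=(128, 256, 512), aspect_ratios=(0.5, 1.0, 2.0))
print(len(anchors))  # → 54 (6 positions x 9 anchors each)
```

Anchors that extend past the image border, like the first few here, are typically ignored during training or clipped to the image.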

Just as an art expert might use different criteria to judge the importance of an object, the RPN scores each region using features learned by the CNN, which can respond to patterns such as edges, corners, and textures. It then ranks the regions by score and suggests the top regions as potential objects of interest.

During training, the RPN's scores and box adjustments are compared with labeled ground-truth boxes, and its weights are updated so that its proposals become more accurate over time. At inference time, the RPN keeps the highest-scoring regions, discards near-duplicates that heavily overlap a better-scoring region, and outputs the regions most likely to contain objects. Another part of the neural network then refines and classifies these proposals.
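
The ranking step is usually paired with non-maximum suppression (NMS), which drops any proposal that overlaps a higher-scoring one too much. The Faster R-CNN paper applies NMS to its proposals with an IoU threshold of 0.7. Below is a minimal greedy sketch. IoU is redefined so the block stands alone, and the box format (x1, y1, x2, y2), function names, and example values are assumptions.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter = max(0.0, min(a[2], b[2]) - max(a[0], b[0])) * max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.7, top_k=None):
    """Greedy NMS: visit boxes from highest to lowest score and keep a box only if it
    does not overlap any already-kept box by more than `iou_threshold`.
    Returns the indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
            # Optionally stop once enough proposals have been kept
            if top_k is not None and len(keep) == top_k:
                break
    return keep


boxes = [(10, 10, 110, 110), (15, 15, 115, 115), (200, 200, 300, 300)]
scores = [0.9, 0.8, 0.75]
print(non_max_suppression(boxes, scores))  # → [0, 2]
```

Box 1 is dropped because it overlaps box 0 with an IoU of about 0.82. Box 2 survives because it does not overlap box 0 at all.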

Image Segmentation with Deep Learning

Many deep learning models for image segmentation use an encoder-decoder architecture. The encoder extracts high-level features from an image, and the decoder turns those features back into a pixel-level segmentation mask that assigns a class to every pixel. This approach can produce highly accurate segmentations, particularly for complex objects with irregular shapes.
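
A segmentation decoder's final layer typically produces one score map per class at the input resolution. The mask is obtained by picking the highest-scoring class at each pixel. Below is a minimal sketch, assuming scores are nested lists indexed [class][row][column]. The function name and the labels (class 0 = background, class 1 = object) are hypothetical.

```python
def scores_to_mask(class_scores):
    """Turn per-pixel class scores into a segmentation mask.

    class_scores[c][y][x] is the decoder's score for class c at pixel (y, x).
    Each mask pixel gets the index of its highest-scoring class.
    """
    num_classes = len(class_scores)
    height, width = len(class_scores[0]), len(class_scores[0][0])
    return [
        [max(range(num_classes), key=lambda c: class_scores[c][y][x]) for x in range(width)]
        for y in range(height)
    ]


background = [[0.9, 0.2, 0.1], [0.8, 0.3, 0.6]]
obj = [[0.1, 0.8, 0.9], [0.2, 0.7, 0.4]]
print(scores_to_mask([background, obj]))  # → [[0, 1, 1], [0, 1, 0]]
```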

What is an Encoder-Decoder architecture?

Imagine you are trying to teach a computer to colorize black and white photos. The computer needs to learn how to identify the different objects and textures in the photo and then apply appropriate colors to them.

The Encoder-Decoder architecture in deep learning is like a team of painters who work together to colorize the photo. The encoder is like a painter who looks at the black and white photo and tries to identify the different features and patterns in it, such as edges, shapes, and textures. The encoder then converts the photo into a compact representation (a set of feature maps) that is easier for the decoder to work with.

The decoder is like another painter who understands the new format and can use it to colorize the photo. The decoder looks at the encoded photo and tries to identify the different objects and textures in it. It then applies appropriate colors to each object and texture, using a set of learned color palettes and styles.

The encoder and decoder are trained together. During training, the colorized output is compared with the original color photo, and the weights of both networks are adjusted to reduce the difference. Repeating this over many examples refines the encoding and decoding process until the colorized photos look realistic and accurate. It is much like a team of painters learning to work together, with each painter contributing their own skill to the final product.
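
For the segmentation models in this section, the encoder and decoder are trained jointly by minimizing a loss computed over every pixel. A common choice is per-pixel cross-entropy against a labeled mask. Below is a minimal sketch, assuming the decoder outputs raw class scores (logits) indexed [class][row][column]. The function name is our own.

```python
import math


def pixelwise_cross_entropy(class_scores, target_mask):
    """Mean cross-entropy between per-pixel class logits and a ground-truth mask.

    class_scores[c][y][x]: raw score for class c at pixel (y, x)
    target_mask[y][x]: correct class index for that pixel
    """
    num_classes = len(class_scores)
    height, width = len(target_mask), len(target_mask[0])
    total = 0.0
    for y in range(height):
        for x in range(width):
            logits = [class_scores[c][y][x] for c in range(num_classes)]
            # Log-sum-exp with the max subtracted for numerical stability
            m = max(logits)
            log_norm = m + math.log(sum(math.exp(v - m) for v in logits))
            # Negative log of the softmax probability of the correct class
            total += log_norm - logits[target_mask[y][x]]
    return total / (height * width)


# Two classes on a 2x2 image, with no preference for either class at any pixel
uniform = [[[0.0, 0.0], [0.0, 0.0]] for _ in range(2)]
target = [[0, 1], [1, 0]]
print(round(pixelwise_cross_entropy(uniform, target), 4))  # → 0.6931 (= ln 2)
```

Backpropagating this loss through both the decoder and the encoder is what adjusts the two networks together.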

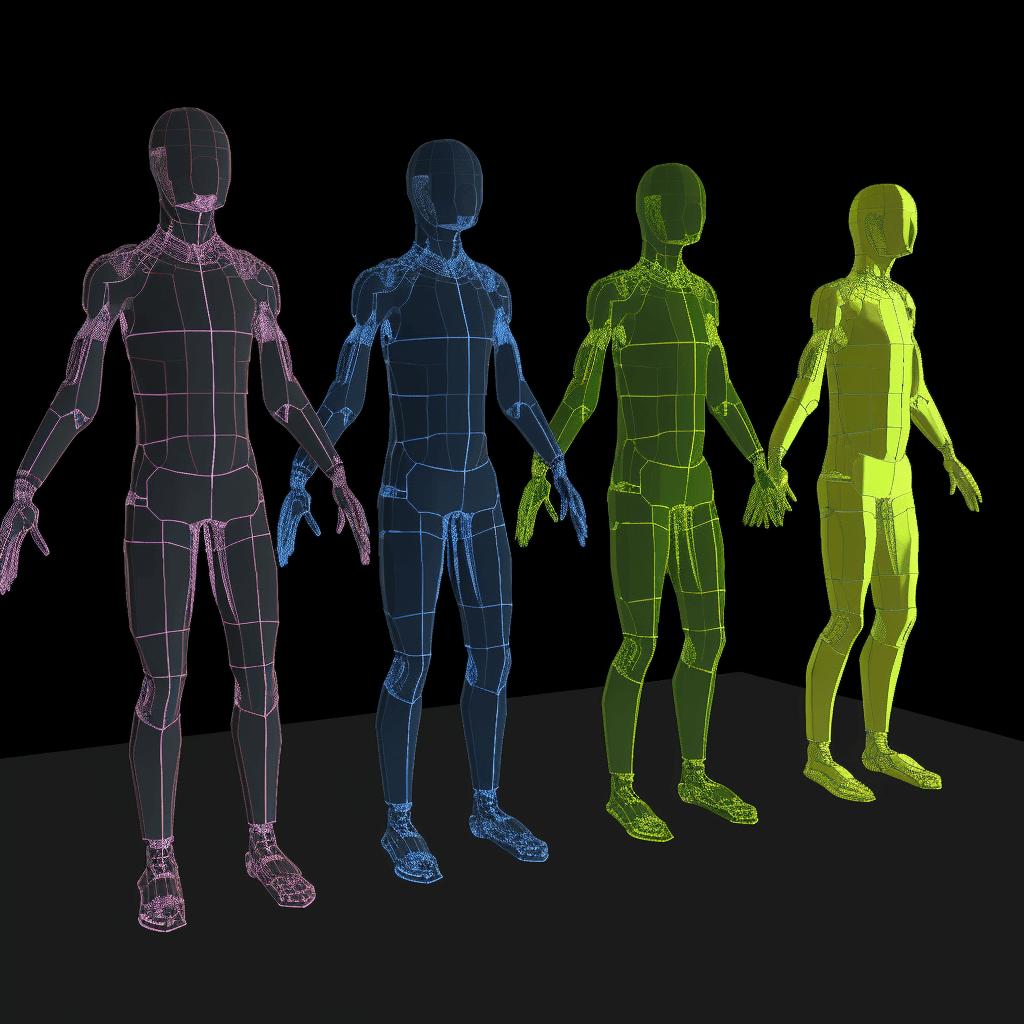

Pose Estimation with Deep Learning

Deep learning models for pose estimation typically use a CNN to extract features from an image, followed by a regression or classification network that predicts joint locations or poses. This approach has proven effective for both human and animal pose estimation, with applications in sports performance analysis and behavioral research.
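
One common way to implement the "predict joint locations" step is to have the network output one heatmap per joint and take the peak of each heatmap as that joint's location. Directly regressing coordinates is another option. Below is a minimal sketch of the heatmap decoding step. The function name, joint names, heatmap values, and stride are hypothetical.

```python
def decode_keypoints(heatmaps, joint_names, stride=1):
    """Return {joint: (x, y, confidence)} from one heatmap per joint.

    heatmaps[j][y][x] is the model's confidence that joint j is at (x, y).
    `stride` rescales heatmap coordinates back to image pixels.
    """
    keypoints = {}
    for name, heatmap in zip(joint_names, heatmaps):
        # Find the heatmap cell with the highest confidence
        best_val, best_x, best_y = max(
            (value, x, y) for y, row in enumerate(heatmap) for x, value in enumerate(row)
        )
        keypoints[name] = (best_x * stride, best_y * stride, best_val)
    return keypoints


heatmaps = [
    [[0.0, 0.1, 0.0], [0.1, 0.9, 0.2], [0.0, 0.1, 0.0]],  # "head"
    [[0.0, 0.0, 0.0], [0.0, 0.1, 0.0], [0.0, 0.3, 0.8]],  # "wrist"
]
print(decode_keypoints(heatmaps, ["head", "wrist"], stride=4))
# → {'head': (4, 4, 0.9), 'wrist': (8, 8, 0.8)}
```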

What is Pose Estimation?

Imagine you are trying to teach a robot how to dance. The robot needs to learn how to move its arms, legs, and body in a specific sequence to perform a dance routine.

Pose Estimation with Deep Learning is like a dance teacher who shows the robot how to move. The deep learning model looks at a series of images or video frames and tries to identify the different body parts and how they move in relation to each other.

Just like how a dance teacher might break down a dance routine into individual moves, the deep learning model breaks down the image into individual body parts and tries to estimate their position and orientation. It does this by using a set of learned features and patterns, such as edges, textures, and colors, to detect each body part.

The model then combines the information from each body part to estimate the overall pose of the person in the image or video frame. This information can be used to track the person’s movements over time, such as in a dance routine, or to identify specific gestures or poses in the image.
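
Once keypoints are estimated, simple geometry can turn them into interpretable measurements, such as the elbow angle a sports analyst might track across video frames. Below is a minimal sketch, assuming 2D keypoints in image coordinates. The function name and example coordinates are hypothetical.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at keypoint b, between segments b->a and b->c
    (for example shoulder, elbow, wrist)."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must be distinct")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to guard against tiny floating-point overshoot outside [-1, 1]
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


shoulder, elbow, wrist = (0, 0), (0, 10), (10, 10)
print(round(joint_angle(shoulder, elbow, wrist), 1))  # → 90.0
```

Computing this angle in every frame gives a time series that can be compared against a reference movement.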

The model can be trained on a large dataset of labeled images or video frames, where the poses are annotated or labeled by humans. This allows the model to learn how to accurately estimate poses in different contexts and under different lighting and camera conditions.

Transfer Learning for Computer Vision Tasks

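One major advantage of deep learning models for computer vision is their ability to transfer knowledge learned from one task to another. For example, a CNN pretrained on a large image classification dataset such as ImageNet can be fine-tuned for a specific object detection or segmentation task. Because the pretrained network already recognizes general visual features such as edges and textures, fine-tuning typically needs far less labeled data and training time than training from scratch, and it often yields higher accuracy.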
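
The core mechanic of transfer learning is to reuse a pretrained feature extractor unchanged and train only a small new head for the new task. A toy, dependency-free sketch can show this. Everything here is hypothetical: `FROZEN_WEIGHTS` stands in for a pretrained CNN backbone, the four-example dataset is made up, and the new head is a plain logistic-regression classifier.

```python
import math

# Hypothetical stand-in for a pretrained backbone. These weights are never updated
# ("frozen"); in real transfer learning this would be a CNN pretrained on a large dataset.
FROZEN_WEIGHTS = [[1.0, -1.0, 0.0], [0.0, 1.0, -1.0]]


def extract_features(x):
    """Frozen feature extractor: a fixed linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]


def train_head(examples, labels, epochs=200, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    weights = [0.0] * len(FROZEN_WEIGHTS)
    bias = 0.0
    # The backbone is frozen, so features can be computed once up front
    features = [extract_features(x) for x in examples]
    for _ in range(epochs):
        for f, y in zip(features, labels):
            p = 1.0 / (1.0 + math.exp(-(sum(w * fi for w, fi in zip(weights, f)) + bias)))
            # For logistic regression, the gradient of the log loss w.r.t. the logit is p - y
            weights = [w - lr * (p - y) * fi for w, fi in zip(weights, f)]
            bias -= lr * (p - y)
    return weights, bias


def predict(weights, bias, x):
    """Classify a new input with the frozen backbone plus the trained head."""
    z = sum(w * fi for w, fi in zip(weights, extract_features(x))) + bias
    return 1 if z > 0 else 0


# A tiny, made-up "new task" with only four labeled examples
examples = [[2, 0, 0], [3, 1, 1], [0, 2, 0], [0, 3, 1]]
labels = [1, 1, 0, 0]
weights, bias = train_head(examples, labels)
print(predict(weights, bias, [4, 1, 1]), predict(weights, bias, [0, 4, 1]))  # → 1 0
```

In a real pipeline, the backbone would come from a deep learning framework with pretrained weights. After the head is trained, some backbone layers are often unfrozen and trained further with a small learning rate.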

Real-World Applications of Deep Learning for Computer Vision

The applications of deep learning for computer vision tasks are numerous and varied. They range from medical image analysis and autonomous driving to industrial quality control and wildlife conservation. Deep learning has proven to be particularly effective in scenarios where traditional computer vision approaches struggle, such as detecting objects in cluttered or occluded environments.

10 real-world applications of deep learning for computer vision:

- Autonomous vehicles: Deep learning is used to recognize objects in the environment, such as other vehicles, pedestrians, and traffic signs, to enable safe navigation.

- Medical imaging: Deep learning is used to detect and classify abnormalities in medical images, such as X-rays and MRIs, to assist with diagnosis and treatment.

- Face recognition: Deep learning is used to identify and verify individuals based on facial features, which has applications in security and surveillance.

- Agriculture: Deep learning is used to analyze crop health and growth patterns, as well as to identify and classify pests and diseases, to improve yields and reduce losses.

- Robotics: Deep learning is used to enable robots to perceive and interact with their environment, such as identifying objects, grasping and manipulating them, and navigating through complex spaces.

- Quality control: Deep learning is used to detect defects and anomalies in manufacturing processes, such as identifying faulty products on an assembly line, to improve quality and reduce waste.

- Retail: Deep learning is used to analyze customer behavior and preferences, such as identifying the products they are interested in and recommending relevant products to them.

- Security: Deep learning is used to identify suspicious behavior and detect threats, such as recognizing weapons or identifying potential threats in crowded public spaces.

- Gaming: Deep learning is used to create more realistic and immersive gaming experiences, such as enabling intelligent non-player characters and realistic physics simulations.

- Sports analytics: Deep learning is used to analyze and interpret sports data, such as player performance and team strategy, to improve training and performance.

Future Directions in Deep Learning for Computer Vision

As deep learning continues to advance, new architectures and techniques are emerging for tackling more complex computer vision tasks. For example, generative models such as GANs and VAEs can be used for image synthesis and inpainting (filling in missing or damaged parts of an image). Attention mechanisms, which let a model give more weight to the most relevant parts of an image, are being explored for improving feature selection and for visualizing what a model focuses on. The future of computer vision looks bright with deep learning leading the way.

What are Generative Adversarial Networks (GANs)?

GANs are composed of two deep neural networks: a generator and a discriminator. The generator learns to generate new data, such as images or audio, that is similar to the training data, while the discriminator learns to distinguish between the generated data and the real data. The two networks are trained together in an adversarial setting: the generator tries to fool the discriminator into thinking that the generated data is real, while the discriminator tries to correctly classify the real and generated data. Through this competition, the generator learns to generate more realistic data, while the discriminator becomes better at distinguishing between real and generated data.
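
The competition described above can be written as the two-player minimax objective from the original GAN paper. Here $D(x)$ is the discriminator's estimated probability that $x$ is real, and $G(z)$ is the generator's output for a random noise vector $z$ drawn from a prior $p_z$. The discriminator maximizes $V$, and the generator minimizes it:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

In practice, the generator is often trained to maximize $\log D(G(z))$ instead of minimizing $\log(1 - D(G(z)))$. The same paper suggests this variant because it gives stronger gradients early in training, when the discriminator easily rejects generated samples.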

What is a Variational Autoencoder (VAE)?

VAEs are another type of generative model, based on the idea of an autoencoder: a neural network that learns to compress data into a compact representation and then reconstruct it. VAEs use a similar architecture, but they also learn a probabilistic model of the data, which allows them to generate new data that is similar to the training data. Instead of mapping each input to a single point, the encoder maps it to a probability distribution over a lower-dimensional representation, called the latent space. The decoder maps points in the latent space back into the original data space. After training on a large dataset, the model can generate new data by sampling points from the latent space and decoding them.
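
The VAE training loss combines two terms. A reconstruction term measures how well the decoder rebuilds the input. A KL-divergence term keeps the encoder's latent distribution close to a standard normal prior, which is what makes sampling from the latent space meaningful. Below is a minimal sketch of two of the pieces, assuming the encoder outputs a mean and a log-variance for each latent dimension (a common parameterization). The function names are our own, and the encoder, decoder, and reconstruction loss are not shown.

```python
import math
import random


def sample_latent(mu, log_var, rng=None):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1).

    Writing the sample this way keeps z differentiable with respect to mu and log_var,
    which lets the encoder be trained with backpropagation.
    """
    rng = rng or random.Random()
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL divergence between N(mu, diag(exp(log_var))) and N(0, I)."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


# An encoder output that matches the prior exactly has zero KL penalty;
# shifting the mean of one dimension by 1 costs 0.5.
print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # → 0.0
print(kl_to_standard_normal([1.0, 0.0], [0.0, 0.0]))  # → 0.5
```

To generate new data after training, you would draw `z` from a standard normal distribution and pass it through the decoder.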

Both GANs and VAEs have many applications, such as generating realistic images, synthesizing new music or speech, and creating new designs or layouts. They are also used in data augmentation, where new training data is generated to improve the performance of other models.
